Abstract

As has often happened in the past, medicine may find itself in captivity of “pioneering and promising” technological trends and fashions. Indeed, while ChatGPT and its progeny may evolve and “become ready for primetime,” we may not – not now, and quite possibly, not ever as an entire species. This brings us back to what we started with: a true philosopher would question humans and not AI. Why do we want AI? Why do we think that we need it? Are we qualified and progressing ethically to first teach AI and then to be able to handle it, much less befriend it, and where is the evidence?

Citation

Rodigin A. Is medicine ready for ChatGPT – why not just ask ChatGPT? Eur J Transl Clin Med. 2023;6(1):5-8.

Here we shall concern ourselves, though not overly so, with the potential (or lack thereof) of the artificial intelligence (AI) chatbot named ChatGPT (generative pretrained transformer) in healthcare, medical education and scientific writing.

Tradition states that medicine is a traditional field. If so, it is not surprising that, just like a kid whose parents for whatever reason forbid all desserts and candy, we may fall for the first available lollipop. With ChatGPT, the prize is a fresh piece of technology, and as such, it seems even more appealing. So we say to ourselves that we should forcefully and purposefully counterbalance our conservative nature. We cannot allow ourselves to fall behind, we fear. We need to use it. We cannot resist the wave of change, we conclude.

Recently the wind has carried the news that ChatGPT has passed the United States professional licensing exams for lawyers (the Uniform Bar Exam) and physicians (the USMLE) [1-2]. But is anyone shocked? Really? Let’s be honest: both of these exams rely on straight memorization (or instantaneous access to the Library of Congress, PubMed and Wikipedia). Therefore, why should anyone be astounded that both exams can be passed by a somewhat duller and self-unaware version of C-3PO*? And by the way, how did you feel coming out of that room after taking the USMLE?

Instead of pondering what this means for AI and its future, perhaps we ought to focus on our educational future in the context of such exams altogether. Let’s face it: to date we have not really understood how human beings learn or how to teach them effectively. For decades we have simply carried on with the tradition of draconian multiple-choice tests as the St. Peter’s Gate to fellowship in our craft, social status and income.

But, no doubt (and to no avail), ChatGPT can be the cure for that too! Always polite and courteous, it will write, administer and grade uniform entrance tests, among others. It will then coach the unfortunate failures for next time: “perhaps retinal diseases are not your thing, Annie” as a delicate motherly consolation, “but my analysis of your testing pattern indicates that you have what it takes to be a babysitter extraordinaire.”

It is completely obvious why ChatGPT stirs both hype and controversy within society at large. ChatGPT is new, in a lot of ways unprecedented, popular, intriguing and available. ChatGPT now symbolizes the best and the worst of civilizational anticipation of AI: grandiose dreams, hopes, plans and fears on the one hand, and all the banalities of commonplace usage on the other. Not so obvious is the question why we are reading so many overly enthusiastic but premature and unfounded reactions, conclusions and expectations, particularly from those thought to be health professionals within their respective job cubicles [3-4].

Recently, while my daughter was playing at the park, I had a conversation about all matters AI in medicine with another parent, a professional data scientist who had finished medical school but decided to pursue research. Long story short, he listed numerous instances in his daily work where paradoxically the only force curbing unhindered misplaced excitement and pressure for AI model adaptation coming from physicians, hospital CEOs and other medical clients is his own scientific team. But how many times can one say “no” to a client who is paying you?

Incorrectly formulated clinical questions, horrible data sets, lack of adequate programming and data theory knowledge and unrealistic expectations of AI capabilities have become way too common in medicine already. Should the doctors who only read article abstracts on weekends be trusted with an AI tool? In adapting ChatGPT or a similar program for widespread use, be it medical education or actual clinical care of patients, whose ethics are we to rely on in drawing boundaries if not our own? Finally, the question whether socialized or commercialized medicine will be better at resisting the temptations offered by AI is not a comfortable question to face in the first place. What if neither one?

My own experience with ChatGPT so far is that it is very quick and efficient in retrieving information, saving you hours of eye strain. In my recent “conversation” with ChatGPT in roughly fifteen minutes, we “discussed” a lot of random topics: from bush planes and best local pizza places to sci-fi writer biographies and the gun control debate. I was not invested and did not care all that much about the validity of information I received, therefore I did not verify it. As an aside, ChatGPT miserably fails even rudimentary self-awareness criteria; not sure why anyone would question that – and I don’t mean Turing.

Others have reported that ChatGPT generates what we may call apologetic lies, though the medical term confabulations, as suggested by my park acquaintance above, is perhaps best. The chatbot apparently makes up stories, data and even full references [5]. As one example, an American physicist and philosopher recently asked ChatGPT about a real Ukrainian philosopher – and obtained 100% false information including fine details about would-be personal life. After repeating the same question, ChatGPT provided a completely different biography of that same philosopher, also all false [6]. Ironically, being a fantasizing liar is one step closer on the path to self-awareness and “anthropomorphism,” not farther.

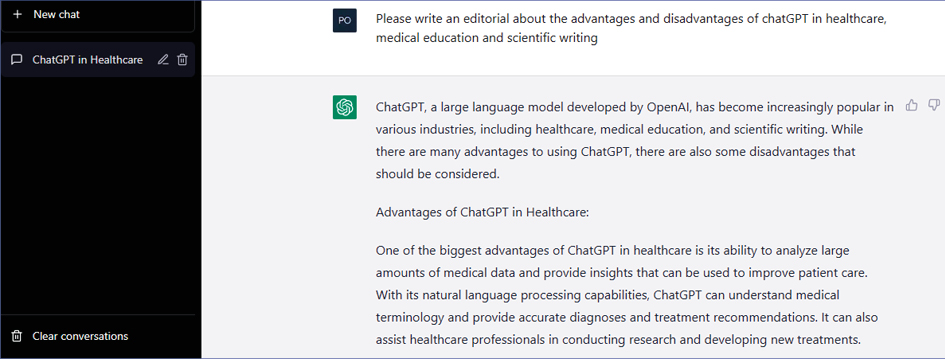

Naturally, I was unable to resist the temptation to ask ChatGPT to generate this short editorial about its use in healthcare and medical education. Here is its response:

Prompt for the ChatGPT-generated essay and beginning of the response. See full text here.

Without dwelling too much on what ChatGPT wrote (the full text is here), I cannot help but ponder the following. First, what “insights” and “personalized recommendations” can be provided by a polite confabulator? Second, what more “important tasks” other than formulating and spelling out their own conclusions should medical researchers be freed up to focus on? Third, as accurately self-disclosed, ChatGPT is in fact a tool and therefore it is subject to every bias of an AI system and the data quality provided to it. But as Capt. Pete “Maverick” Mitchell has repeatedly told us: “it’s not the plane, it’s the pilot” [7]. Last, all the wishy-washy talk about “improving patient care” and “enhancing medical education” really sounds like a poorly written medical school admission essay (no, I am not giving anyone any ideas!) that also discusses “helping people” and “serving humanity.” Might be true, but way too cliché.

In all honesty, any “analysis” of ChatGPT at this stage, even omitting the fact that it is a prototype, restricted in its scope and resources and is under development, will tend to place it into one of three ponds: Chat as rather negative and evil, Chat as amazing and a panacea, or Chat as something in between, depending on this and that [5, 8]. The vast majority of opinions fall into the last. While quite possibly correct, they are also very much predictable and as such, very unilluminating and boring. Citing the inevitability of AI development (but wait, that development does not just happen on its own – we’re the ones driving it!), and ChatGPT as a “game changer”, most will conclude with a limited cautious optimism toward implementation [9].

More introspective and even wiser articles would focus on ourselves, the people, and not the ChatGPT platform. Indeed, while ChatGPT and its progeny may evolve and “become ready for primetime,” we may not – not now, and quite possibly, not ever as an entire species [10]. This brings us back to what we started with: a true philosopher would question humans and not AI. Why do we want AI? Why do we think that we need it? Are we qualified and progressing ethically to first teach AI and then to be able to handle it, much less befriend it, and where is the evidence?

Thanks to multiple science fiction writers and film producers, thoughts of a benign friendly AI are by now deeply instilled in our minds and in our collective subconscious. From David 8 in “Prometheus” and Data in “Star Trek” to Sonny in “I, Robot” and Andrew Martin in “Bicentennial Man,” we seem to long for an autonomous, self-aware, amiable, but artificial companion. In the medical profession, we of course all want to hang with the benevolent and clinically omnipotent Baymax pet. Is this just another manifestation of our weaknesses and insecurities? Or is it yet another technological push in the hope to one day achieve immortality?

My final point is that while we can debate what ChatGPT may mean for and bring to medical student education, we should not forget what Dr. Google has already accomplished for our patients’ anxiety levels. Because it may very well turn out that while we are preoccupied with Chat’s future version 17.0 and its improvements, our patients will again be way ahead of us in Chat-diagnosing and Chat-prescribing.

As has often happened in the past, medicine may find itself in captivity of “pioneering and promising” technological trends and fashions. In 2003, as a second-year medical student, I succumbed to such pressure and purchased a then state-of-the-art Palm Pilot… and never even opened the box. I wonder what Data and Baymax would say about that. By the way, can I still get a refund?

Disclaimer

All views are only of the author and not any affiliated organizations.

Funding

None.

Conflicts of interest

There is no conflict of interest in this project.

______________________________________________________________________

*The C-3PO is the iconic humanoid robot character from the “Star Wars” films.

References

1. Patrice J. New GPT-4 Passes All Sections Of The Uniform Bar Exam. Maybe This Will Finally Kill The Bar Exam. [Internet]. Above the Law. 2023 [cited 2023 Mar 27]. Available from: https://abovethelaw.com/2023/03/new-gpt-4-passes-all-sections-of-the-uniform-bar-exam-maybe-this-will-finally-kill-the-bar-exam/.

2. USMLE Program Discusses ChatGPT [Internet]. USMLE.org. 2023 [cited 2023 Mar 27]. Available from: https://www.usmle.org/usmle-program-discusses-chatgpt.

3. Patel SB, Lam K. ChatGPT: the future of discharge summaries? Lancet Digit Health [Internet]. 2023 Mar;5(3):e107–8. Available from: https://linkinghub.elsevier.com/retrieve/pii/S2589750023000213.

4. Sabry Abdel-Messih M, Kamel Boulos MN. ChatGPT in Clinical Toxicology. JMIR Med Educ [Internet]. 2023 Mar 8;9:e46876. Available from: http://www.ncbi.nlm.nih.gov/pubmed/36867743.

5. Alkaissi H, McFarlane SI. Artificial Hallucinations in ChatGPT: Implications in Scientific Writing. Cureus [Internet]. 2023 Feb 19;15(2). Available from: https://www.cureus.com/articles/138667-artificial-hallucinations-in-chatgpt-implications-in-scientific-writing.

6. Baumeister A. Jewish philosopher Evgeniy Baumeister, who was friends with Ivan Franko. ChatGPT as a researcher and conversationalist. (quoted chat log generated by Alexey Burov, USA) [post in Russian] [Internet]. Patreon. 2023. p. March. Available from: https://www.patreon.com/andriibaumeister.

7. Kosinski J. Top Gun: Maverick. USA: Paramount Pictures; 2022.

8. Quintans-Júnior LJ, Gurgel RQ, Araújo AA de S, Correia D, Martins-Filho PR. ChatGPT: the new panacea of the academic world. Rev Soc Bras Med Trop [Internet]. 2023;56:e0060. Available from: http://www.ncbi.nlm.nih.gov/pubmed/36888781.

9. Xue VW, Lei P, Cho WC. The potential impact of ChatGPT in clinical and translational medicine. Clin Transl Med [Internet]. 2023 Mar;13(3):e1216. Available from: http://www.ncbi.nlm.nih.gov/pubmed/36856370.

10. The Lancet Digital Health. ChatGPT: friend or foe? Lancet Digit Health [Internet]. 2023 Mar;5(3):e102. Available from: https://linkinghub.elsevier.com/retrieve/pii/S2589750023000237.